You can now try out Google’s latest AI model, Gemini 2.0 Flash (experimental), directly in Google AI Studio or by using your own local GUI in Open WebUI. To connect to the API through a pipeline in Open WebUI, follow these steps:

- Install Open WebUI using Docker: Follow the instructions in the “Quick Start with Docker” section of the Open WebUI documentation: https://docs.openwebui.com/getting-started/quick-start#quick-start-with-docker-

- Install Pipelines: Install Pipelines as described in the “Quick Start with Docker” section of the Open WebUI Pipelines documentation (https://docs.openwebui.com/pipelines/). Important: when connecting Open WebUI to Pipelines, use `host.docker.internal` instead of `localhost`.

- Add the Google Manifold Pipeline: In the Open WebUI admin panel, navigate to Admin Panel > Settings > Pipelines tab. Add the following pipeline URL: https://github.com/open-webui/pipelines/blob/main/examples/pipelines/providers/google_manifold_pipeline.py

- Add your Gemini API Key: Obtain your Gemini API key from https://aistudio.google.com/apikey and paste it into the Pipelines tab in Open WebUI.

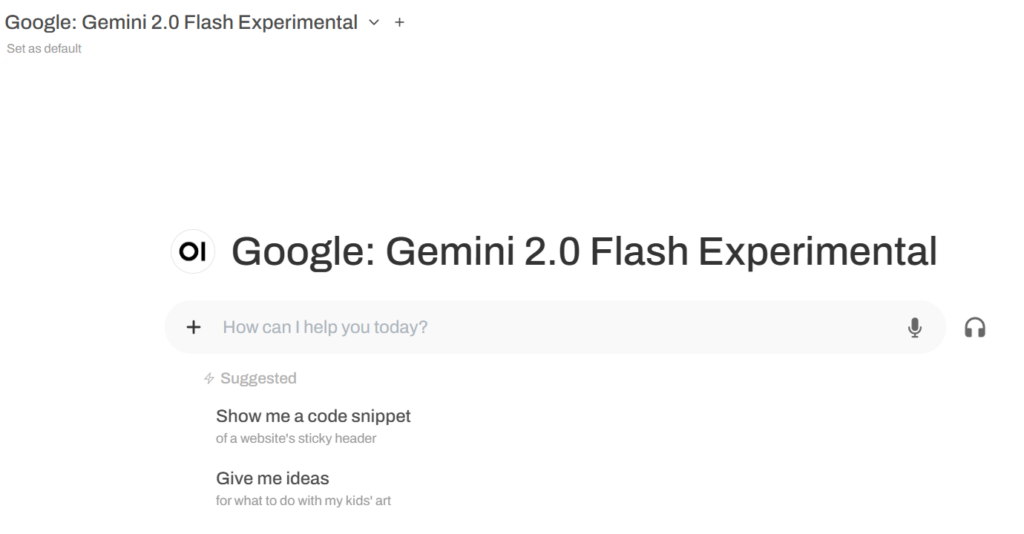

- Start Chatting: When you start a new chat, the Google AI models appear in the model selection list, including Gemini 2.0 Flash (experimental). Select it and start your conversation.
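The `host.docker.internal` note in the Install Pipelines step is the one most likely to trip you up. Inside the Open WebUI container, `localhost` refers to the container itself, not to the machine where Pipelines is listening. The Python sketch below illustrates that rewrite and adds a quick connectivity check. It assumes the Pipelines defaults (port 9099 and API key `0p3n-w3bu!`) and an OpenAI-style `GET /models` endpoint that returns `{"data": [{"id": ...}, ...]}`. Treat these as assumptions and adjust them to your setup. The helper names are hypothetical.

```python
"""Sketch: check the Open WebUI -> Pipelines connection.

Assumptions (not from the original post): Pipelines listens on port 9099,
uses the default API key "0p3n-w3bu!", and exposes an OpenAI-style
GET /models endpoint returning {"data": [{"id": ...}, ...]}.
"""
import json
import urllib.request
from urllib.parse import urlsplit, urlunsplit

PIPELINES_URL = "http://localhost:9099"  # assumed default, as seen from the host
PIPELINES_API_KEY = "0p3n-w3bu!"  # assumed default key; change it for real deployments


def container_url(url: str) -> str:
    """Rewrite a localhost URL so it reaches the host from inside a Docker container.

    Inside the Open WebUI container, "localhost" is the container itself,
    so the host has to be addressed as host.docker.internal instead.
    """
    parts = urlsplit(url)
    if parts.hostname in ("localhost", "127.0.0.1"):
        netloc = "host.docker.internal"
        if parts.port is not None:
            netloc += f":{parts.port}"
        parts = parts._replace(netloc=netloc)
    return urlunsplit(parts)


def gemini_model_ids(payload: dict) -> list[str]:
    """Return the model ids in a /models response that mention "gemini"."""
    return [
        model["id"]
        for model in payload.get("data", [])
        if "gemini" in model.get("id", "").lower()
    ]


def fetch_models(base_url: str, api_key: str) -> dict:
    """GET {base_url}/models with a bearer token and decode the JSON body."""
    request = urllib.request.Request(
        f"{base_url.rstrip('/')}/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

`container_url(PIPELINES_URL)` gives the address to enter in Open WebUI (`http://host.docker.internal:9099`). Run `gemini_model_ids(fetch_models(PIPELINES_URL, PIPELINES_API_KEY))` on the host to see whether the Gemini models show up once the manifold pipeline and API key are in place. The exact model ids depend on the pipeline version.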

My first impression is that it handles both coding and writing tasks well, and it's blazingly fast. I'll definitely be using it more, and it's good to see Google making progress.